I try to keep my systems up to date. Updating causes problems, but unmaintained machines have an incredible ability to create bigger ones. At Calidade Systems we chose oVirt for our internal infrastructure: it's really easy to add hardware and storage, and every month brings new improvements. Obviously it is not a perfect solution, but the perfect virtualization environment doesn't exist yet.

Last Saturday I decided to update the oVirt server, the compute nodes and the storage servers. During the oVirt update I saw a message saying something like:

> These machines have snapshots in an older format and will be incompatible with the updated oVirt version.
>
> Machine XXX
>
> Machine XXX

I assumed this would not be a problem: they were backup snapshots taken a long time ago and are useless nowadays. I continued the process by updating ovirt-engine, and everything was fine. Then I started updating the virtualization nodes, and two machines refused to migrate to the new nodes. I chose a pragmatic solution: shut down those virtual machines, update the last nodes and power the machines on again after the update, since they aren't 24/7 machines.

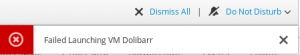

After the update I started the virtual machines, and a beautiful red message appeared on screen for one of them.

At that moment I remembered that it was one of the machines listed during the update. Damn!! I should have removed those snapshots before running the upgrade. Looking in the ovirt-engine log I found this line:

```text
2019-08-12 14:35:27,469+02 ERROR [org.ovirt.engine.core.dal.dbbroker.auditloghandling.AuditLogDirector] (ForkJoinPool-1-worker-13) [] EVENT_ID: VM_DOWN_ERROR(119), VM Dolibarr is down with error. Exit message: Bad volume specification {'address': {'function': '0x0', 'bus': '0x00', 'domain': '0x0000', 'type': 'pci', 'slot': '0x06'}, 'serial': 'eac6b589-b733-482a-ae87-e66477aab4fd', 'index': 0, 'iface': 'virtio', 'apparentsize': '13116506112', 'specParams': {}, 'cache': 'none', 'imageID': 'eac6b589-b733-482a-ae87-e66477aab4fd', 'truesize': '13116915712', 'type': 'disk', 'domainID': 'a1e7ec14-24b2-497b-a061-2ef0869d58b8', 'reqsize': '0', 'format': 'cow', 'poolID': '506e1ccd-a98a-4441-83a0-51bd0e3a441e', 'device': 'disk', 'path': '/rhev/data-center/506e1ccd-a98a-4441-83a0-51bd0e3a441e/a1e7ec14-24b2-497b-a061-2ef0869d58b8/images/eac6b589-b733-482a-ae87-e66477aab4fd/126eda41-6197-4d77-a682-2dd25314c01f', 'propagateErrors': 'off', 'name': 'vda', 'bootOrder': '1', 'volumeID': '126eda41-6197-4d77-a682-2dd25314c01f', 'diskType': 'file', 'alias': 'ua-eac6b589-b733-482a-ae87-e66477aab4fd', 'discard': False}.
```
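The fields that matter for the rest of this recovery (`domainID`, `imageID`/`serial`, `volumeID` and `path`) can be pulled out of that message with a small shell function. This is a minimal sketch, not an oVirt tool: `get_field` is a hypothetical helper, and it assumes each field appears as `'key': 'value'` in single quotes, exactly as in the line above.

```shell
# Hypothetical helper: print the value of one quoted field from a VDSM
# "Bad volume specification" message, e.g. 'volumeID': '126eda41-...'.
# Assumes the 'key': 'value' single-quoted layout seen in engine.log.
get_field() {
  local key="$1" line="$2"
  printf '%s\n' "$line" \
    | grep -o "'${key}': '[^']*'" \
    | head -n 1 \
    | sed "s/^'${key}': '\(.*\)'\$/\1/"
}
```

For example, `get_field volumeID "$(grep -m 1 'Bad volume specification' /var/log/ovirt-engine/engine.log)"` should print the volume UUID, assuming the engine log is in its default location. Only the first match is returned, which matters for keys such as `type` that appear more than once.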

At this point I could see only three options:

- Create a new virtual machine and recover the data from a VM data backup

- Revert the oVirt update

- Play with the oVirt volumes

The first option is the easy way, since I had periodic backups of the virtual machine data. Reverting an oVirt update would take a lot of time and could generate bigger problems, so I decided to go straight to the third option.

These virtual machine disks are stored on NFS, so just navigate to the NFS export folder.

There you will find a folder with a UUID name, which should be the same as the domainID.

Inside it you will find three folders:

- dom_md

- images

- master

Inside the images directory there are a lot of UUID-named folders.

One of them matches the serial (which is also the imageID) shown in the error:

`'serial': 'eac6b589-b733-482a-ae87-e66477aab4fd'`
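The layout described above can be checked before touching anything. This is a sketch under assumptions: `image_dir` is a hypothetical function, `export_root` stands for whatever directory your NFS server exports to oVirt, and the sanity check only looks for the `dom_md` and `images` folders mentioned above.

```shell
# Hypothetical helper: print the image folder of a disk on an NFS storage domain.
#   $1 export_root: directory exported by the NFS server (adjust to your setup)
#   $2 domain_id:   storage domain UUID (domainID in the engine log)
#   $3 image_id:    disk UUID (serial / imageID in the engine log)
image_dir() {
  local export_root="${1%/}" domain_id="$2" image_id="$3"
  local domain="$export_root/$domain_id"
  # A storage domain folder should contain dom_md and images.
  if [ ! -d "$domain/dom_md" ] || [ ! -d "$domain/images" ]; then
    echo "not a storage domain folder: $domain" >&2
    return 1
  fi
  if [ ! -d "$domain/images/$image_id" ]; then
    echo "no image folder for $image_id in $domain/images" >&2
    return 1
  fi
  printf '%s\n' "$domain/images/$image_id"
}
```

For this VM the call would be `image_dir /path/to/export a1e7ec14-24b2-497b-a061-2ef0869d58b8 eac6b589-b733-482a-ae87-e66477aab4fd`, where `/path/to/export` is a placeholder for your own export directory.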

Inside it we will find three files for each snapshot, plus another three for the current state of the disk:

- 126eda41-6197-4d77-a682-2dd25314c01f

- 126eda41-6197-4d77-a682-2dd25314c01f.lease

- 126eda41-6197-4d77-a682-2dd25314c01f.meta

- 78ba6ba4-0bdb-4b66-9a2e-03c1410cc7bd

- 78ba6ba4-0bdb-4b66-9a2e-03c1410cc7bd.lease

- 78ba6ba4-0bdb-4b66-9a2e-03c1410cc7bd.meta

- cec2eca1-b690-4790-8c33-00552b94023d

- cec2eca1-b690-4790-8c33-00552b94023d.lease

- cec2eca1-b690-4790-8c33-00552b94023d.meta

Of these files, we will focus on volumeID 126eda41-6197-4d77-a682-2dd25314c01f, the volume referenced in the error.
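To see how the three volumes relate (which one is the active leaf and which are older snapshots), the `.meta` files can be summarised. This is a hedged sketch: `show_chain` is a hypothetical helper, and it assumes the `KEY=VALUE` layout VDSM normally writes to `.meta` files on file-based domains, with `VOLTYPE` (LEAF or INTERNAL), `FORMAT` (COW or RAW) and `PUUID` (the parent volume UUID, all zeros for the base volume). Look at one of your own `.meta` files before relying on it.

```shell
# Hypothetical helper: one line per volume in an image folder, e.g.
#   <volume-uuid> LEAF COW parent=<parent-uuid>
# Assumes VDSM-style .meta files made of KEY=VALUE lines.
show_chain() {
  local dir="${1:-.}" meta vol
  for meta in "$dir"/*.meta; do
    [ -e "$meta" ] || continue   # no .meta files: the glob stays literal
    vol=$(basename "$meta" .meta)
    awk -F= -v vol="$vol" '
      $1 == "VOLTYPE" { type = $2 }
      $1 == "FORMAT"  { fmt = $2 }
      $1 == "PUUID"   { parent = $2 }
      END { printf "%s %s %s parent=%s\n", vol, type, fmt, parent }
    ' "$meta"
  done
}
```

Run `show_chain .` inside the image folder. The volume reported as LEAF should be the volumeID from the error. If the QEMU tools are installed, `qemu-img info --backing-chain 126eda41-6197-4d77-a682-2dd25314c01f` shows the same chain as recorded in the qcow2 headers.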

If these images are no longer valid for oVirt but were working before the update, I assumed I could convert the volume into a standalone qcow2 image.

I copied the folder eac6b589-b733-482a-ae87-e66477aab4fd and its contents to a test machine, and ran this command inside it:

```shell
# Flatten the volume and the snapshot volumes it depends on into one compressed (-c) qcow2
qemu-img convert -f qcow2 -O qcow2 -c 126eda41-6197-4d77-a682-2dd25314c01f doli.qcow2
```

It takes some time, but it worked perfectly: if I start this qcow2 image in QEMU, my machine boots with all the data it had before shutting down.

Now it's time to import this qcow2 image into oVirt.

Here you will find all the information

After that, you only need to restore the MAC address and other parameters on the imported virtual machine.